Envoy — Building the Message Owl Stack

Envoy is a lightweight distributed agent written in Go.

20 December 2017: this was the first product I built for ER (since rebranded as Oxiqa). My task was to analyze the existing system and propose an implementation plan for a bulk messaging solution.

Dispatcher was an earlier script that did just one thing: send SMS through an IPsec VPN tunnel to a single telecom SMSC.

At the beginning of the project, customers had a simple Ruby backend that covered only the bare minimum requirements and was not sufficient for a full solution. The project needed a backbone to support the platform for distributed message delivery. We thought of a name and called it Envoy.

Between August 2017 and October 2017, a total of 3,000 SMS were sent, a figure that includes test messages. However, because of Dispatcher’s implementation and limitations in its SMPP library, some messages marked as delivered were never actually delivered.

As of 20 December 2017, Envoy had been rebuilt entirely from scratch in Go, with a focus on high availability and redundancy and a huge improvement in performance.

Envoy can now handle an estimated 75,000 recipients per minute, far beyond Dispatcher’s limit of 100 recipients in 3.3 minutes. That estimate assumes no load balancing; load balancing across multiple SMSCs can easily push the figure past 100,000 recipients. The numbers can go higher still by increasing the number of sessions to multiple telco SMSC servers.

Envoy helped increase the message traffic by 200% after it was launched in December 2017.

However, because of VPN connectivity issues and flaws in Dispatcher itself, the script was never production-ready: it had no queuing, high availability, or failover. The issues below, all caused by Dispatcher’s implementation, are what led us to build Envoy.

1. Unavoidable delays caused by the following implementation choices

- 3 second sleep between messages (a single message will have varying number of recipients).

- 1 second sleep between same message sent to different recipients.

- 10 second sleep if a connection error happens between message sending.

- 1 second sleep between next fetch cycle from DB.

“These values are hard coded constants in the dispatcher script.”

Example: If Ahmed wants to send “testing 1” to 100 recipients and Mohamed wants to send “testing 2” to 2 recipients, Dispatcher would take 3.3 minutes to deliver the messages, and Mohamed’s messages have to wait until Ahmed’s are delivered. This makes the service unreliable for instant message delivery.

- A connection error adds a further 10 second wait before the next message is tried, which the example above does not take into account.

- Failed messages are not retried in the same fetch cycle. If a message fails to send to a recipient, it is only retried in the next fetch cycle, which means after all current messages have been processed.

2. PostgreSQL Database Locking

While messages are being processed, the messages table is locked explicitly in **ACCESS EXCLUSIVE MODE**. This is the most restrictive lock mode in PostgreSQL.

What this means is that while Dispatcher is processing messages, no customer can add new messages to Message Owl. “1 message to 100 recipients” would lock the table for 3.3 minutes, and no user would be able to enter new messages into the system during that time. Depending on the number of recipients in each fetch cycle, this time can range from 30 minutes to an hour or more.

3. Logging

Dispatcher had no proper logging with timestamps.

The logs were filled with these:

TypeError: cannot concatenate 'str' and 'NoneType' objects

Traceback (most recent call last):

File "/root/messageowl-ooredoo-dispatcher/dispatcher.py", line 65, in <module>

print "[Success] From: " + message[1] + " To: " + recipient[1] + " " + message[2]

Even the logged lines contain no timestamp information, making it hard to debug issues.

Envoy

Envoy is built to fix the aforementioned issues and cater to the message delivery demands of our users. To remedy the shortcomings of the previous implementation, we settled on a few key requirements that would help pave the way.

- Implementation should be simple.

- It should be reliable.

- It should log enough data to figure out failures and their causes.

- Must be able to load balance between different providers.

- Must be able to handle failures of upstream.

To make our implementation simpler, we chose Go for its first-class support for concurrency primitives.

Reaching a reliability level we were comfortable with was a bit of a challenge. Even though SMPP is standardized, implementations often diverge in subtle ways, which makes debugging hard. We started by following the SMPP v3.4 standard and then adjusted our implementation to accommodate the intricacies of upstream SMSCs.

For logging we did not use anything special. Go comes with standard logging facilities in the log package of its standard library. Every log entry includes a timestamp along with enough contextual data to debug issues when they arise.

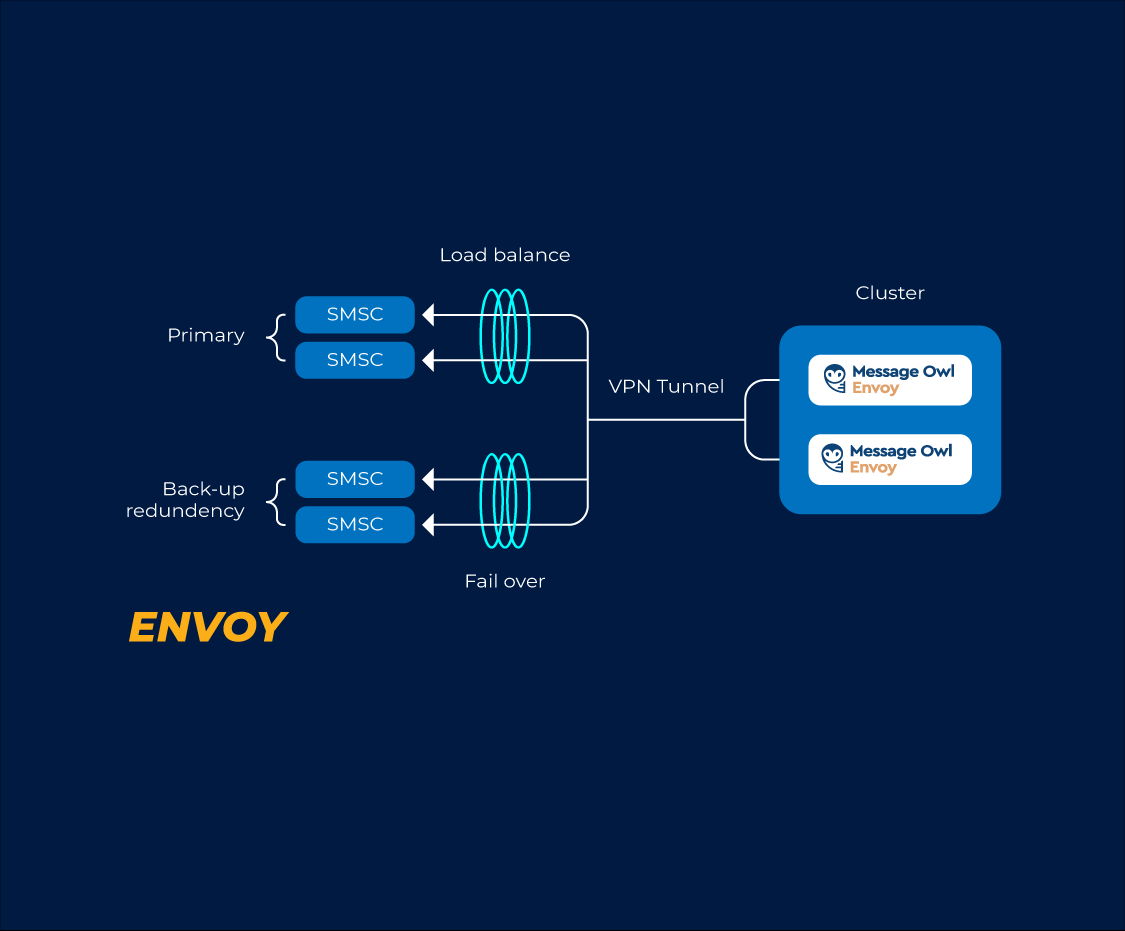
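As a rough illustration of the logging approach described above, here is a minimal sketch using only Go's standard log package. The `envoy ` prefix, the field names (`route`, `message_id`, `recipient`) and the helper functions are assumptions made up for this example; they are not taken from Envoy's actual code.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"log"
	"os"
)

// newLogger builds a standard-library logger whose entries start with a
// prefix followed by a UTC date and time with microsecond resolution.
func newLogger(w io.Writer) *log.Logger {
	return log.New(w, "envoy ", log.LstdFlags|log.Lmicroseconds|log.LUTC)
}

// submitContext formats the contextual fields attached to a log entry.
// The field names are hypothetical and chosen only for this sketch.
func submitContext(route string, messageID int, recipient string, err error) string {
	if err != nil {
		return fmt.Sprintf("status=failed route=%s message_id=%d recipient=%s err=%q",
			route, messageID, recipient, err)
	}
	return fmt.Sprintf("status=ok route=%s message_id=%d recipient=%s",
		route, messageID, recipient)
}

func main() {
	logger := newLogger(os.Stdout)
	// Placeholder route names and recipients, not real data.
	logger.Print(submitContext("smsc-a", 42, "0000001", nil))
	logger.Print(submitContext("smsc-b", 43, "0000002", errors.New("connection reset")))
}
```

Unlike the Dispatcher output shown earlier, every line carries a timestamp and says which message, route and recipient it refers to.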

Load balancing and failure handling are dealt with by the route selection strategies implemented in Envoy. Before we go into those strategies, I think it’s important to highlight some key aspects of route selection that are common to all of them.

- A route is considered BUSY if it's in the middle of handing off a message to upstream.

- A route is considered DOWN when the SMPP connection to upstream is down. This could be due to many different reasons.

We have a concept of route exceptions, which are set per message. A route is added to a message's exceptions when the message fails to send on that route while the route is not DOWN. The failed message is rescheduled for the next cycle, and in that cycle the failed route is not tried.
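The route exception idea can be sketched roughly as follows. The `Route` and `Message` types and the `noteFailure` and `candidates` helpers are hypothetical names invented for this illustration, and a plain map stands in for however Envoy really stores exceptions.

```go
package main

import "fmt"

// Route is a simplified upstream route; only the fields needed here.
type Route struct {
	Name string
	Down bool // true when the SMPP connection to upstream is down
}

// Message carries its own set of route exceptions.
type Message struct {
	ID         int
	Exceptions map[string]bool // names of routes this message must skip
}

// noteFailure records r as an exception for msg, but only when the send
// failed while the route itself was not DOWN.
func noteFailure(msg *Message, r Route) {
	if r.Down {
		return
	}
	if msg.Exceptions == nil {
		msg.Exceptions = make(map[string]bool)
	}
	msg.Exceptions[r.Name] = true
}

// candidates returns the routes msg may still be tried on in the next cycle.
func candidates(msg *Message, routes []Route) []Route {
	var out []Route
	for _, r := range routes {
		if !msg.Exceptions[r.Name] {
			out = append(out, r)
		}
	}
	return out
}

func main() {
	routes := []Route{{Name: "smsc-a"}, {Name: "smsc-b"}}
	msg := &Message{ID: 1}

	noteFailure(msg, routes[0]) // send failed on smsc-a while it was up
	for _, r := range candidates(msg, routes) {
		fmt.Println("next cycle may try:", r.Name)
	}
}
```

Because exceptions live on the message rather than on the route, one message's failure does not stop other messages from using the same route.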

Routes also have a concept of priority, which is defined in configuration. Priorities are just arbitrary numbers; higher numbers mean higher priority. Routes are sorted by priority according to the selected strategy. Even a high-priority route sinks to the bottom of the list if its status is set to DOWN.
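To make the ordering concrete, here is a small sketch of sorting routes by priority while letting DOWN routes sink to the bottom. The `Status` constants (including the name `Up` for an idle, connected route), the `Route` fields and `sortRoutes` are assumptions for this example rather than Envoy's real types.

```go
package main

import (
	"fmt"
	"sort"
)

// Status is the state of a route. BUSY and DOWN come from the text above;
// Up is this sketch's name for an idle, connected route.
type Status int

const (
	Up Status = iota
	Busy
	Down
)

// Route is a simplified upstream route.
type Route struct {
	Name     string
	Priority int // arbitrary number from configuration; higher wins
	Status   Status
}

// sortRoutes orders routes in place: routes that are not DOWN come first,
// highest priority first; DOWN routes follow regardless of their priority.
func sortRoutes(routes []Route) {
	sort.SliceStable(routes, func(i, j int) bool {
		di, dj := routes[i].Status == Down, routes[j].Status == Down
		if di != dj {
			return !di // a live route sorts ahead of a DOWN one
		}
		return routes[i].Priority > routes[j].Priority
	})
}

func main() {
	routes := []Route{
		{Name: "smsc-a", Priority: 10, Status: Down},
		{Name: "smsc-b", Priority: 5, Status: Up},
		{Name: "smsc-c", Priority: 7, Status: Busy},
		{Name: "smsc-d", Priority: 1, Status: Up},
	}
	sortRoutes(routes)
	for _, r := range routes {
		fmt.Println(r.Name, r.Priority)
	}
}
```

In this example smsc-a has the highest configured priority but ends up last because it is DOWN.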

Envoy includes three different failure and load balancing strategies.

1. Load Balance

This strategy simply moves on to the next route, in priority order, if the current route is busy or down.

2. Failover

This strategy is similar to the load balance strategy, except that it does not penalize routes that are in BUSY status. The failover strategy only considers other routes when a route is marked DOWN.

3. Smart Load Balance Failover

This is a combination of both aforementioned strategies. This strategy load balances between same priority routes and fails over to others if higher priority routes are down. Even in failure cases this strategy will load balance between the next priority level.
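The three strategies can be approximated with the sketch below. It is one reading of the descriptions above, not Envoy's actual implementation: all type and function names are hypothetical, route exceptions are left out for brevity, and the behaviour when every route in a priority level is BUSY (hand back the first BUSY route instead of dropping to a lower level) is an assumption.

```go
package main

import (
	"fmt"
	"sort"
)

type Status int

const (
	Up Status = iota // idle and connected (name chosen for this sketch)
	Busy
	Down
)

type Route struct {
	Name     string
	Priority int
	Status   Status
}

// byPriority returns a copy of routes with the highest priority first.
func byPriority(routes []Route) []Route {
	out := append([]Route(nil), routes...)
	sort.SliceStable(out, func(i, j int) bool { return out[i].Priority > out[j].Priority })
	return out
}

// loadBalance picks the first route, in priority order, that is neither
// BUSY nor DOWN.
func loadBalance(routes []Route) (Route, bool) {
	for _, r := range byPriority(routes) {
		if r.Status == Up {
			return r, true
		}
	}
	return Route{}, false
}

// failover picks the first route, in priority order, that is not DOWN.
// A BUSY route is still acceptable.
func failover(routes []Route) (Route, bool) {
	for _, r := range byPriority(routes) {
		if r.Status != Down {
			return r, true
		}
	}
	return Route{}, false
}

// smartLoadBalanceFailover finds the highest priority level that has a live
// route, then prefers an idle route within that level.
func smartLoadBalanceFailover(routes []Route) (Route, bool) {
	var chosen Route
	found := false
	for _, r := range byPriority(routes) {
		if r.Status == Down {
			continue
		}
		if found && r.Priority != chosen.Priority {
			break // left the highest live priority level
		}
		if !found {
			chosen, found = r, true // first live route fixes the level
		}
		if r.Status == Up {
			return r, true // idle route within the level
		}
	}
	return chosen, found // assumption: fall back to a BUSY route in the level
}

func main() {
	routes := []Route{
		{Name: "smsc-a", Priority: 10, Status: Down},
		{Name: "smsc-b", Priority: 5, Status: Busy},
		{Name: "smsc-c", Priority: 5, Status: Up},
		{Name: "smsc-d", Priority: 1, Status: Up},
	}
	lb, _ := loadBalance(routes)
	fo, _ := failover(routes)
	sm, _ := smartLoadBalanceFailover(routes)
	fmt.Println("load balance:", lb.Name)
	fmt.Println("failover:", fo.Name)
	fmt.Println("smart:", sm.Name)
}
```

With smsc-a down, failover settles on smsc-b even though it is busy, while the other two strategies pick the idle smsc-c. A picked route would become BUSY while it hands off the message, so consecutive messages would naturally spread across routes of the same priority.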

Message Owl Stack

It was essential to develop the Message Owl Stack for this product to stand out as an enterprise bulk messaging solution. I will talk a bit about Envisage (a big-data framework implementation to support AI/ML in the future and to generate reports) in a future post.

Note: Credit to my colleague Ismail Kaleem for fixing the VPN connectivity issues.